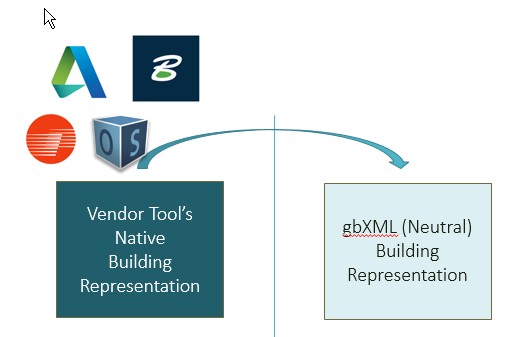

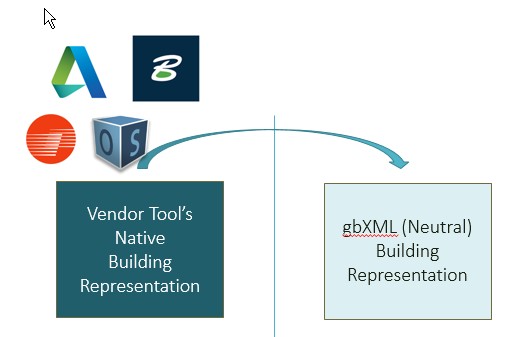

Carmel Software is a member of the Board of Directors of gbXML.org, which hosts the Green Building XML (gbXML) open schema. This schema facilitates the transfer of building properties stored in 3D building information models (BIM) to engineering analysis tools, all in the name of designing more energy-efficient buildings.

For example, an architect designing a building in a BIM tool such as Autodesk Revit can not only create the building geometry but also assign properties to the building, including lighting density, occupancy hours, wall and window properties, and much more. All of this information can be exported (or “saved as”) as a gbXML file, which is then imported into a software analysis tool that predicts yearly building energy usage (such as Trane TRACE). The purpose of this integration is to eliminate the need to re-enter information into the analysis tool that is already available in the BIM tool.

gbXML has proven so popular that almost 40 software tools worldwide have some type of gbXML integration.

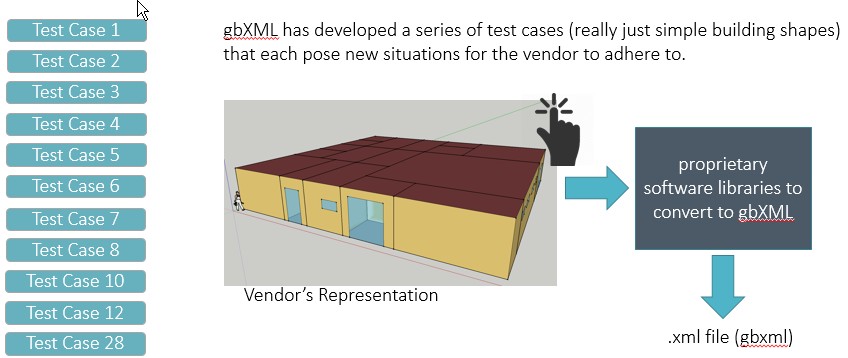

Recently, we were funded by the US Department of Energy and the National Renewable Energy Lab (NREL) to develop a suite of “test cases” that software vendors must pass to become gbXML certified. Certification gives vendors whose tools produce gbXML a stamp of approval from gbXML.org.

Achieving Certification

How does a vendor achieve certification? gbXML.org has developed a series of test cases (in fact, just very simple building shapes), each of which poses a new situation for the vendor’s tool to handle. The vendor recreates each simple building shape in their own software tool, then “saves as” gbXML and uploads the file to our validator software. If the exported building shape closely matches the test case gbXML file, the test “passes”:

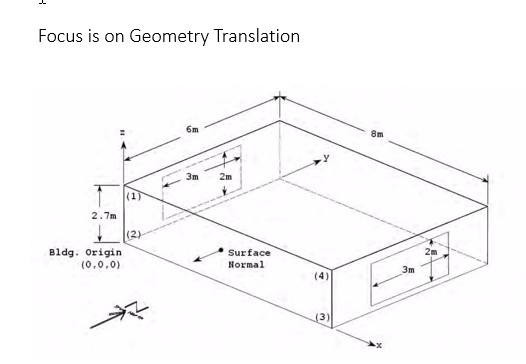
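The “closely matches” comparison can be illustrated with a minimal sketch. It assumes each surface has been reduced to a list of (x, y, z) vertices and that two polygons match when one is a cyclic rotation of the other, with every vertex within a fixed distance tolerance. The function names and the 0.01 tolerance are hypothetical and are not taken from the gbXML.org validator.

```python
from math import dist

Point = tuple[float, float, float]


def polygons_match(submitted: list[Point], reference: list[Point], tol: float = 0.01) -> bool:
    """Return True if `submitted` equals `reference` up to a cyclic shift of its
    starting vertex, with every vertex within `tol` of its counterpart.

    Reversed vertex order is deliberately treated as a mismatch, because it
    flips the surface normal (the surface would face the wrong way)."""
    n = len(reference)
    if len(submitted) != n:
        return False
    for shift in range(n):
        if all(dist(submitted[(i + shift) % n], reference[i]) <= tol for i in range(n)):
            return True
    return False


def unmatched_surfaces(submitted: list[list[Point]], reference: dict[str, list[Point]],
                       tol: float = 0.01) -> list[str]:
    """Name every reference surface that no submitted polygon matches, so a
    report can say which surface caused a failure, not just that one occurred."""
    return [name for name, ref in reference.items()
            if not any(polygons_match(sub, ref, tol) for sub in submitted)]
```

Reporting unmatched surfaces by name is one way a validator could make its pass/fail results easier to interpret.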

What is being tested? For each test case, there are a number of items being “tested”, and it is all focused on geometry:

- Are all the surfaces in the right location and orientation?

- Are all the surfaces properly defined? Walls are walls, floors are floors, interior/exterior, etc.

- Is the gbXML “good”? That is, no typos, incorrect names, or missing fields.
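Parts of the second and third checks can be automated with Python’s standard-library XML parser. This is a sketch under stated assumptions: it uses the gbXML namespace `http://www.gbxml.org/schema`, checks only a subset of the schema’s `surfaceType` values, and the function name `check_gbxml` is hypothetical. It catches malformed XML, missing geometry, and misspelled surface types, but it is not a substitute for validating against the gbXML XSD.

```python
import xml.etree.ElementTree as ET

NS = {"gb": "http://www.gbxml.org/schema"}

# A subset of gbXML surfaceType values (assumed; the XSD holds the full enumeration).
KNOWN_SURFACE_TYPES = {
    "ExteriorWall", "InteriorWall", "UndergroundWall", "Roof", "Ceiling",
    "InteriorFloor", "RaisedFloor", "SlabOnGrade", "UndergroundSlab",
    "UndergroundCeiling", "Shade", "Air",
}


def check_gbxml(text: str) -> list[str]:
    """Return human-readable problems; an empty list means these checks passed."""
    try:
        root = ET.fromstring(text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    problems = []
    for surface in root.iter(f"{{{NS['gb']}}}Surface"):
        sid = surface.get("id", "<missing id>")
        stype = surface.get("surfaceType")
        if stype is None:
            problems.append(f"{sid}: missing surfaceType")
        elif stype not in KNOWN_SURFACE_TYPES:
            problems.append(f"{sid}: unknown surfaceType {stype!r}")
        if surface.find("gb:PlanarGeometry", NS) is None:
            problems.append(f"{sid}: missing PlanarGeometry")
    return problems
```

Full schema validation needs a validating parser; for example, lxml’s `etree.XMLSchema` can load an XSD and validate a parsed document against it.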

Example Test Cases

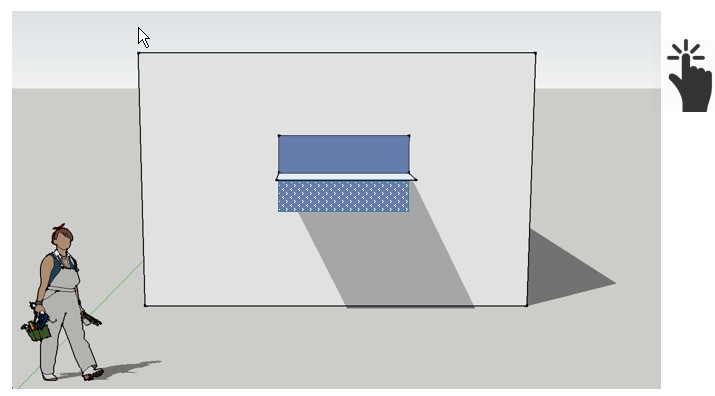

Test Case 2: A window test case (see image below)

- Single window is drawn

- User converts to gbXML

- Two windows are created

- Overhang is linked to both windows

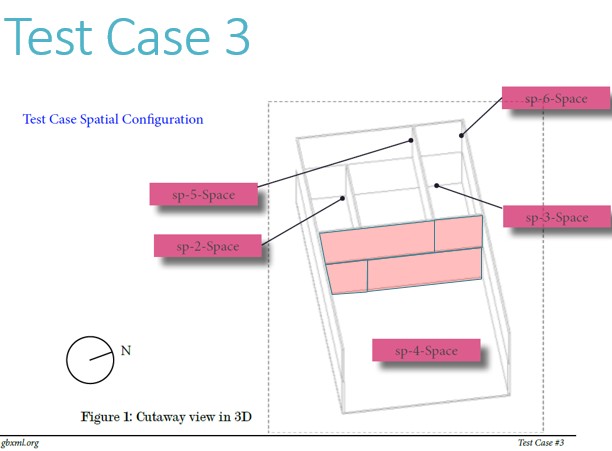

Test Case 3: A test case for spatial configuration. If a tool submits for compliance without claiming automated capabilities, the wall types are declared explicitly, exported to gbXML, and submitted for compliance.

If a tool submits for compliance and advertises automated capabilities, the wall types do not need to be called out, and the translation to a thermal representation of the building in gbXML happens automatically:

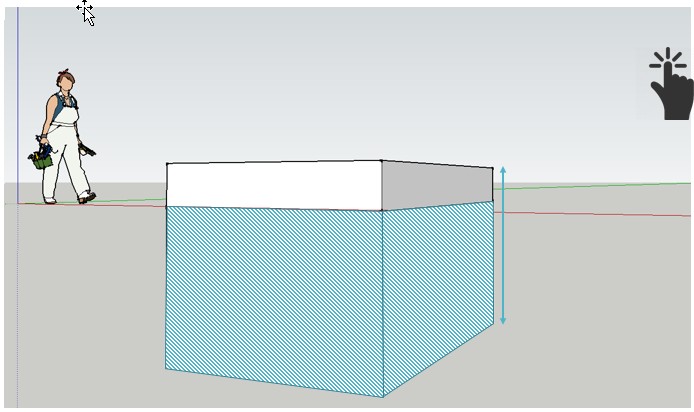

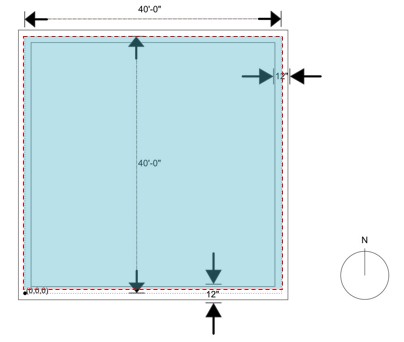

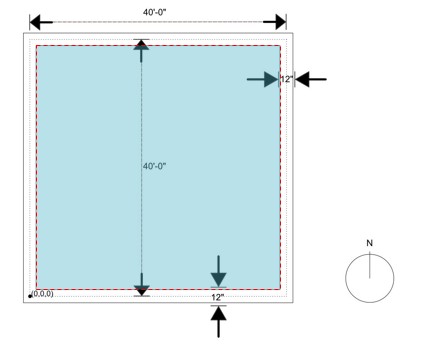
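The automated path above requires the tool to infer wall types from the spatial layout. One simple rule, sketched here under the assumption that spaces are drawn as plan rectangles whose shared walls have identical endpoints, is to count how many spaces bound each wall segment: a segment bounded by two spaces is an interior wall, and a segment bounded by one is an exterior wall. The helper names are hypothetical; real tools must also handle partially overlapping walls, below-grade surfaces, and non-rectangular spaces.

```python
from collections import defaultdict

Point2 = tuple[float, float]
Segment = tuple[Point2, Point2]


def rectangle_edges(x0: float, y0: float, x1: float, y1: float) -> list[Segment]:
    """Plan edges of an axis-aligned rectangular space, counterclockwise."""
    c = [(x0, y0), (x1, y0), (x1, y1), (x0, y1)]
    return [(c[i], c[(i + 1) % 4]) for i in range(4)]


def classify_walls(spaces: dict[str, list[Segment]]) -> dict[Segment, tuple[str, list[str]]]:
    """Map each unique wall segment to (surfaceType, names of adjacent spaces)."""
    owners: dict[Segment, list[str]] = defaultdict(list)
    for name, edges in spaces.items():
        for a, b in edges:
            key = (min(a, b), max(a, b))  # same key regardless of drawing direction
            owners[key].append(name)
    return {
        seg: ("InteriorWall" if len(names) == 2 else "ExteriorWall", names)
        for seg, names in owners.items()
    }


# Two 5 m x 4 m spaces side by side, sharing the wall at x = 5.
walls = classify_walls({
    "Space_A": rectangle_edges(0, 0, 5, 4),
    "Space_B": rectangle_edges(5, 0, 10, 4),
})
interior = [seg for seg, (kind, _) in walls.items() if kind == "InteriorWall"]
print(interior)  # [((5, 0), (5, 4))]
```

The shared segment is emitted once with both space names, which mirrors how an interior surface in gbXML typically references its two adjacent spaces rather than being duplicated.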

Test Case 5: Here is an example of a test case (see image below):

- Single volume is drawn

- User converts to gbXML

- Walls are automatically separated

- gbXML shows 8 walls (4 above grade and 4 below grade)

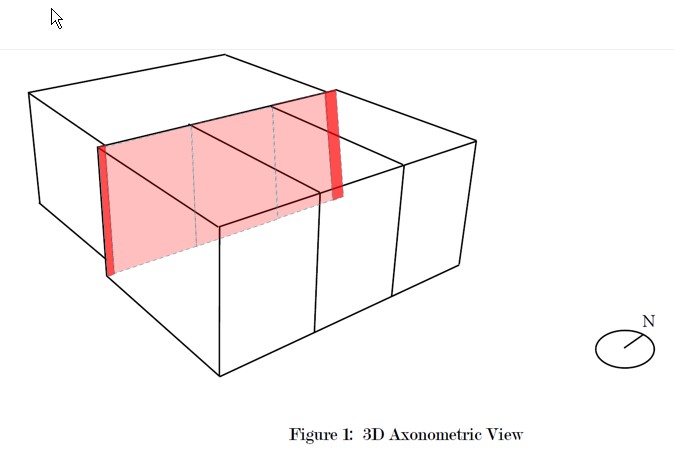
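The wall separation in Test Case 5 can be sketched for the simple case of vertical rectangular walls. The sketch assumes grade is the plane z = 0 and describes each wall by its plan endpoints plus bottom and top elevations, using a hypothetical `Wall` class; real tools must split arbitrary polygons. Each wall that crosses grade becomes an `ExteriorWall` above grade and an `UndergroundWall` below it, so a single box spanning grade yields the 8 walls the test case expects.

```python
from collections import Counter
from dataclasses import dataclass

Point2 = tuple[float, float]


@dataclass
class Wall:
    start: Point2          # plan (x, y) of one end of the wall
    end: Point2            # plan (x, y) of the other end
    z_bottom: float
    z_top: float
    surface_type: str = "ExteriorWall"


def split_at_grade(wall: Wall, grade: float = 0.0) -> list[Wall]:
    """Split a vertical wall at the grade plane and label each piece."""
    if wall.z_top <= grade:  # entirely below grade
        return [Wall(wall.start, wall.end, wall.z_bottom, wall.z_top, "UndergroundWall")]
    if wall.z_bottom >= grade:  # entirely above grade
        return [Wall(wall.start, wall.end, wall.z_bottom, wall.z_top, "ExteriorWall")]
    return [
        Wall(wall.start, wall.end, grade, wall.z_top, "ExteriorWall"),
        Wall(wall.start, wall.end, wall.z_bottom, grade, "UndergroundWall"),
    ]


def box_walls(width: float, depth: float, z_bottom: float, z_top: float) -> list[Wall]:
    """The four perimeter walls of a single rectangular volume."""
    c = [(0.0, 0.0), (width, 0.0), (width, depth), (0.0, depth)]
    return [Wall(c[i], c[(i + 1) % 4], z_bottom, z_top) for i in range(4)]


# A single volume extending from 3 m below grade to 3 m above grade.
walls = [piece for w in box_walls(10.0, 8.0, -3.0, 3.0) for piece in split_at_grade(w)]
counts = Counter(w.surface_type for w in walls)
print(len(walls), counts)  # 8 walls: 4 ExteriorWall and 4 UndergroundWall
```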

Test Case 6: If a tool submits for compliance without claiming automated capabilities, the wall types are declared explicitly, exported to gbXML, and submitted for compliance.

If a tool submits for compliance and advertises automated capabilities, the wall types do not need to be called out, and the translation to a thermal representation of the building in gbXML happens automatically:

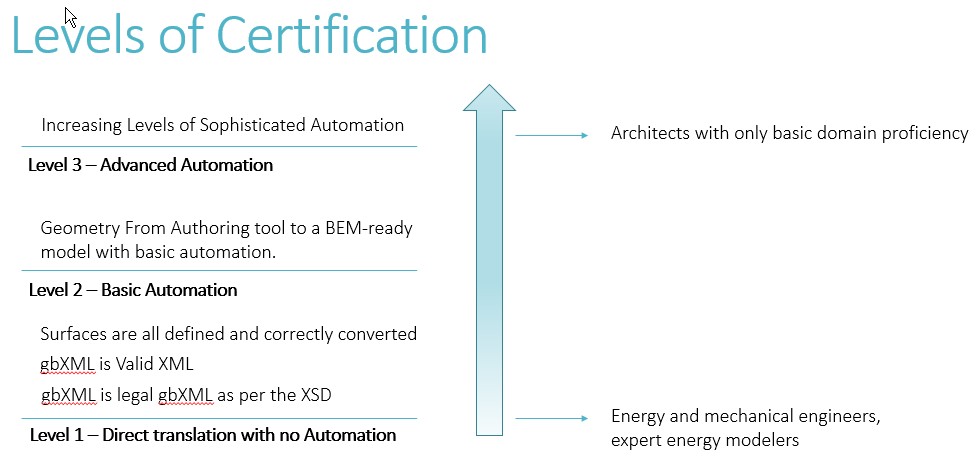

Levels of Certification

There are 3 levels of gbXML certification:

- Level 1: Direct translation with no automation

- Level 2: Basic automation, where:

  - Surfaces are all defined and correctly converted

  - gbXML is valid XML

  - gbXML is legal gbXML as per the XSD

- Level 3: Advanced automation, where geometry from the authoring tool is converted into a BEM-ready model, going beyond basic automation

Level 1 and Level 2 test cases are essentially the same. The only difference is whether the tool makes it easier, through automation, to identify surface types without the user having to do it manually.

Level 3 test cases are new industry reach goals. No tool on the market today can pass all of the Level 3 test cases; these are advanced benchmarks.

The total number of test cases is in flux. We expect our user community to email us requesting more test cases when they find flaws in vendor software.

All test cases at a given level must be passed to achieve full certification at that level (“Level 1”, “Level 2”, etc.).

See the image below for more information on the levels of certification.

Example of Certification Process

Our first validation customer has been the National Renewable Energy Lab (NREL). We have been working with their OpenStudio software tool, which works on top of EnergyPlus to calculate yearly building energy usage. OpenStudio has encountered some difficulty in passing all of the tests. The reasons for this are twofold:

- The nature of some of the test cases is beyond OpenStudio’s intended scope

- The validator has also been found to be too stringent

Some of these stringency issues include:

- No allowance for metric units, or mixed metric and I-P units

- Ambiguous acceptance of interior ceilings or floors

- Do the azimuth and lower-left coordinate truly have meaning for horizontal surfaces?

- Handling geometry from egg-shell tools (e.g., SketchUp, FormIt)

- Handling geometry from tools with Interior Surface enumeration (Bentley)

- Better abstraction of wall polygons
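Two of these issues, unit handling and the meaning of azimuth for horizontal surfaces, can be illustrated in code. The sketch below assumes the file’s `lengthUnit` value has already been read from the root `gbXML` element, that vertices are ordered so the right-hand rule gives the outward normal, and that +y points north. It converts coordinates to meters before any comparison and derives tilt and azimuth from the polygon normal using Newell’s method, which shows why azimuth is ill-defined for a horizontal surface: its normal has no horizontal component. The conversion table is an assumed subset to check against the XSD, and all function names are hypothetical.

```python
import math
from typing import Optional

Point = tuple[float, float, float]

# Meters per unit for common gbXML lengthUnit values (assumed; check the XSD enumeration).
METERS_PER_UNIT = {
    "Meters": 1.0,
    "Centimeters": 0.01,
    "Millimeters": 0.001,
    "Kilometers": 1000.0,
    "Feet": 0.3048,
    "Inches": 0.0254,
    "Miles": 1609.344,
}


def to_meters(points: list[Point], length_unit: str) -> list[Point]:
    """Convert vertex coordinates to meters so metric and I-P files compare equally."""
    factor = METERS_PER_UNIT[length_unit]
    return [(x * factor, y * factor, z * factor) for x, y, z in points]


def newell_normal(points: list[Point]) -> Point:
    """Unit normal of a planar polygon via Newell's method (right-hand rule on vertex order)."""
    nx = ny = nz = 0.0
    for (x1, y1, z1), (x2, y2, z2) in zip(points, points[1:] + points[:1]):
        nx += (y1 - y2) * (z1 + z2)
        ny += (z1 - z2) * (x1 + x2)
        nz += (x1 - x2) * (y1 + y2)
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("degenerate polygon has no normal")
    return (nx / length, ny / length, nz / length)


def tilt_degrees(points: list[Point]) -> float:
    """Angle from straight up to the normal: 0 = faces up, 90 = vertical, 180 = faces down."""
    nz = newell_normal(points)[2]
    return math.degrees(math.acos(max(-1.0, min(1.0, nz))))


def azimuth_degrees(points: list[Point]) -> Optional[float]:
    """Compass direction of the normal, clockwise from north (+y); None when horizontal."""
    nx, ny, _ = newell_normal(points)
    if math.hypot(nx, ny) < 1e-9:
        return None  # horizontal surface: the normal has no compass direction
    return math.degrees(math.atan2(nx, ny)) % 360.0
```

Returning `None` for horizontal surfaces, rather than an arbitrary number, is one way a validator could avoid failing a file over a value that carries no geometric meaning.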

Handling Different Types of Geometry

Handling Geometry From Egg-Shell Modelers (SketchUp, FormIt):

If all surfaces and zones are drawn on the centerline:

- The surface vertices will be correct (because surface vertices are defined at the centerline)

- But the area and volume calculations will be incorrect (when compared to a thick wall representation). Areas and volumes, by definition, must take into account wall thicknesses.

Solution: Tweak the validation process. We will make special considerations for the area and volume calculations when the vendor is submitting a geometry modeler of this type.
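As an illustration of the kind of adjustment involved, consider a rectangular zone drawn on wall centerlines with a uniform wall thickness t. Each wall occupies t/2 on either side of its centerline, so each interior dimension shrinks by t (t/2 at each end). The sketch assumes uniform wall thickness, a rectangular zone, and a clear floor-to-ceiling height; the function names are hypothetical.

```python
def net_floor_area(length_cl: float, width_cl: float, wall_thickness: float) -> float:
    """Interior floor area of a rectangular zone whose walls are drawn on their centerlines.

    Half the wall thickness lies inside the zone at each end, so each
    interior dimension is the centerline dimension minus one full thickness."""
    return (length_cl - wall_thickness) * (width_cl - wall_thickness)


def net_volume(length_cl: float, width_cl: float, wall_thickness: float, height: float) -> float:
    """Interior volume: net floor area times the clear floor-to-ceiling height."""
    return net_floor_area(length_cl, width_cl, wall_thickness) * height


# 10 m x 8 m on centerlines, 0.3 m walls, 3 m clear height.
centerline_area = 10.0 * 8.0
thick_wall_area = net_floor_area(10.0, 8.0, 0.3)
print(centerline_area, thick_wall_area)
```

Here the centerline area is 80 m² while the thick-wall area is about 74.7 m², roughly a 7% difference. A validator could either apply a correction like this or widen its area and volume tolerance for egg-shell modelers.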

Handling Geometry From Interior Surface Modelers (Bentley):

If all surfaces and zones are represented as interior:

- The surface vertices will be incorrect (because surface vertices are normally defined at the centerline)

- But the area and volume calculations will be correct (when compared to a thick wall representation). Areas and volumes, by definition, must take into account wall thicknesses.

Solution: Tweak the validation process. We will make special considerations for the area and volume calculations when the vendor is submitting a geometry modeler of this type.
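For interior-surface modelers, the surface vertices lie on the interior faces rather than on the centerline. Offsetting each zone’s interior boundary outward by half the wall thickness is one way to recover centerline vertices. A minimal sketch, assuming a counterclockwise plan polygon (viewed from above), uniform wall thickness, miter joins, and no collinear consecutive vertices; `offset_polygon` is a hypothetical helper, not part of any gbXML tool.

```python
import math

Point2 = tuple[float, float]


def offset_polygon(points: list[Point2], distance: float) -> list[Point2]:
    """Offset a counterclockwise plan polygon outward by `distance` (miter joins).

    Each edge is shifted along its outward normal, and each new vertex is the
    intersection of the two shifted edges that meet there."""
    n = len(points)
    lines = []  # (point on shifted edge, edge direction)
    for i in range(n):
        (x1, y1), (x2, y2) = points[i], points[(i + 1) % n]
        dx, dy = x2 - x1, y2 - y1
        length = math.hypot(dx, dy)
        # For a counterclockwise polygon the outward normal points to the right of travel.
        nx, ny = dy / length, -dx / length
        lines.append(((x1 + nx * distance, y1 + ny * distance), (dx, dy)))
    result = []
    for i in range(n):
        (px, py), (ux, uy) = lines[i - 1]  # shifted edge arriving at vertex i
        (qx, qy), (vx, vy) = lines[i]      # shifted edge leaving vertex i
        denom = ux * vy - uy * vx          # zero if the two edges are parallel
        t = ((qx - px) * vy - (qy - py) * vx) / denom
        result.append((px + t * ux, py + t * uy))
    return result


# Interior boundary of a 4 m x 3 m room with 0.3 m walls -> centerline boundary.
centerline = offset_polygon([(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)], 0.3 / 2)
print(centerline)
```

The result is approximately a 4.3 m x 3.3 m rectangle whose corners sit 0.15 m outside the interior corners, which is where a centerline-based reference file would place them.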

OpenStudio Validation Findings:

OpenStudio has encountered difficulty passing all the tests.

Primary:

- The nature of some of the test cases is beyond OpenStudio’s intended scope

- The validator has also been found to be too stringent

Secondary:

- The current validation documentation could be clearer

- The validator interface could be improved to clarify why a test passes or fails

gbXML is Proposing Changes to Simplify and Clarify Compliance:


Findings:

- The nature of certain test cases is beyond OpenStudio’s intended scope

- The validator is too stringent

- Documentation needs to clarify what is being tested

- The validator interface could be improved to clarify why a test passed or failed

gbXML’s forward-looking position:

- The number of test cases required to pass needs to decrease

- The validator requirements will relax

- Documentation will be improved and put online

- The validator interface will also be improved to be clearer